As AI’s influence on individuals and society increases, so, too, do calls for the AI industry to assume greater responsibility for the impact of its products. Consequently, recent years have seen a growing number of organizations adopt responsible AI principles and frameworks. Though these principles vary from one organization to another, almost all of them include “explainability” (that is, making AI systems more understandable to humans) as a key requirement for developing AI responsibly.

One proposed approach to explainability is the use of XAI (explainable AI) tools. Broadly, XAI tools are algorithmic tools intended to increase the explainability of ML (machine learning) systems. They can do this by helping users better understand the rationale behind an ML model’s predictions, its strengths and weaknesses, or how it will behave in the future. In recent years, a wave of such tools has been developed. With so many tools available, however, how can AI/ML practitioners know which XAI tools are appropriate for their purposes?

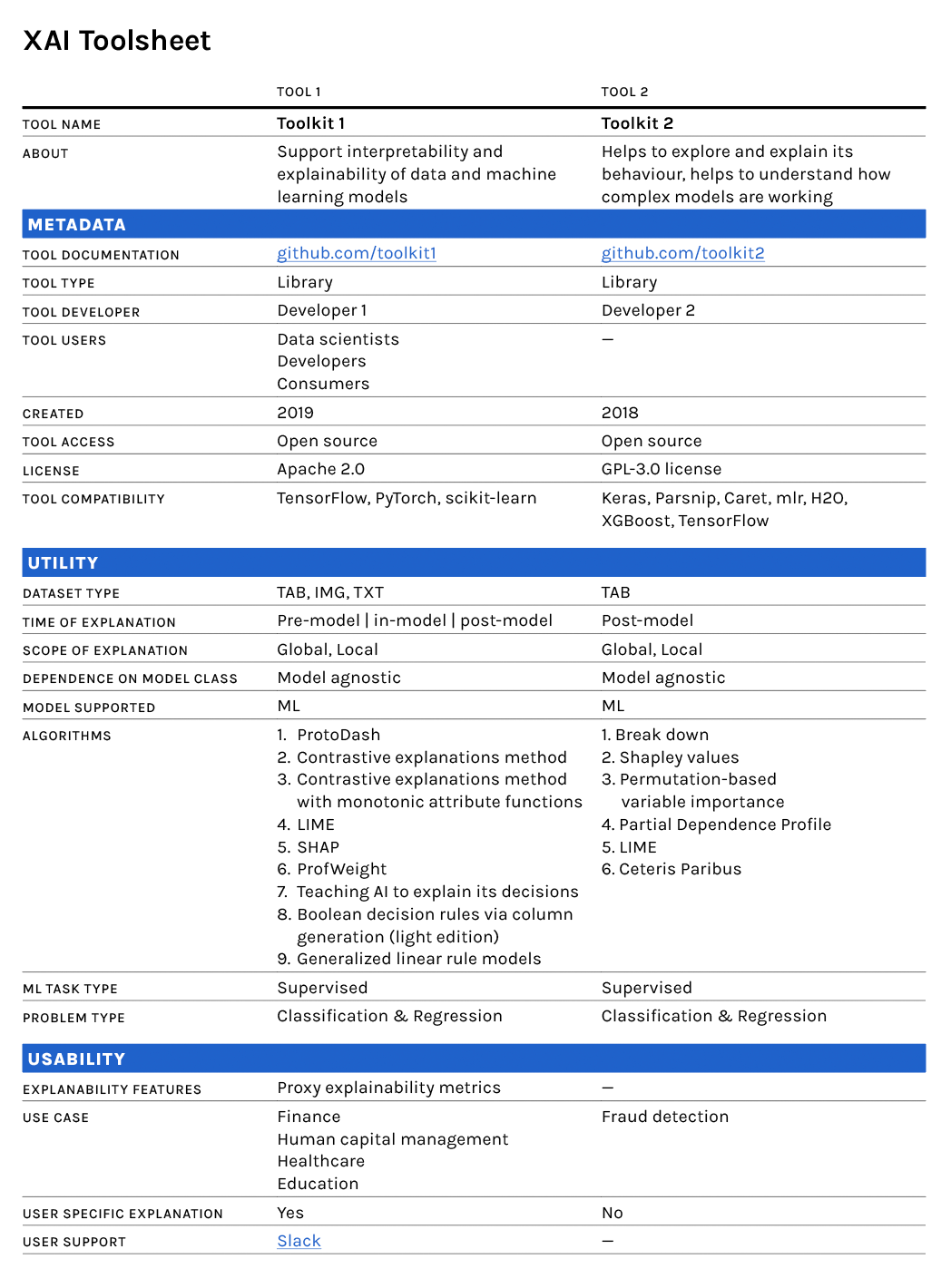

Inspired by similar efforts to document algorithms themselves, Partnership on AI’s (PAI’s) ABOUT ML Program has been developing the “XAI Toolsheet,” a framework and template for documenting the most relevant features of XAI tools. It is intended to help practitioners easily compare XAI tools and to guide them through the selection process. To create such a toolsheet, however, we first need to identify which features of XAI tools must be documented for a practitioner to assess their functionality and usefulness. Read on to learn more about our research on this topic and why it is needed to advance the usability of XAI tools.

A Crowded Landscape of XAI Tools

Each year, the number of available XAI tools (developed by both academics and industry practitioners) grows, giving those interested in using them more options than ever. Here, we define “XAI tool” broadly to mean any means of directly implementing an explainability algorithm. In our initial research, PAI identified more than 150 XAI-related tools published between 2015 and 2021.

Previous studies have warned of “choice overload,” the phenomenon where too many options lead people to make poor choices with undesirable outcomes or to avoid making a choice at all. In the AI context, undesirable outcomes can be relatively innocuous (like a bad movie recommendation), but they can also be severe (like misdiagnosing an illness), which makes good choices particularly important in this field. Furthermore, with so many tools available, there is a higher risk that choosing an XAI tool will become a box-checking exercise. Faced with so many choices, practitioners may find it easier to pick an XAI tool as simple proof of an effort to explain ML model results, rather than carefully considering which one will actually aid responsible AI development.

While the sheer number of XAI tools makes picking one difficult, making a fully informed choice poses the opposite problem: information about these tools is scarce and scattered. With no single, central database of XAI tools, PAI had to pull information from a variety of sources to perform our review. These included peer-reviewed journal and conference papers, non-peer-reviewed repositories such as arXiv, industry blog posts, and GitHub repositories dedicated both to single tools and to curated lists of tools.

For practitioners to successfully select a useful XAI tool, they must be able to assess the tool’s ability to provide domain-appropriate explainability, understand its limitations, and have a clear idea about how it can be used to produce meaningful information about ML model predictions. Currently, it is difficult for practitioners to compare the properties of XAI tools. Furthermore, there is limited research on the properties of XAI tools a practitioner would even need to know in order to choose one for their desired task.

Our Research

Against this background, PAI has been researching what XAI tool features need to be documented so they can be effectively assessed for usefulness and functionality. This research will serve as the basis of the XAI Toolsheet, a documentation initiative that allows practitioners to compare XAI tools across 22 dimensions to guide them in their selection process.

In creating this documentation framework, we were inspired by existing efforts to make ML systems transparent and accountable through documentation. These include Gebru et al.’s Datasheets for Datasets, Bender and Friedman’s Data Statements, Holland et al.’s Data Nutrition Label, Pushkarna et al.’s Data Cards, Mitchell et al.’s Model Cards, Arnold et al.’s FactSheets, and Procope et al.’s System Cards. While it shares these efforts’ aim of organizing information to make ML systems more transparent, PAI’s XAI Toolsheet differs from this prior work in its focus on the properties of XAI tools (as opposed to those of datasets or ML models). Through our original research and concepts drawn from the field of UX (user experience) design, we arrived at 22 dimensions of XAI tools that would be most useful for practitioners to know when comparing them. We further grouped these 22 dimensions into three major categories: metadata, utility, and usability. Additionally, we propose a one-page summary format, called the XAI Toolsheet, in which each XAI tool’s dimensions should be presented.

A visual prototype of the XAI Toolsheet for illustration purposes only. PAI plans to validate and refine the content and format of the XAI Toolsheet with XAI tool developers and users.

In this context, we use “metadata” to mean short descriptions that provide basic information about a tool. We identify eight metadata dimensions of XAI tools that summarize their properties and make it easier for practitioners to find and use them. For “utility,” we adopt the UX design definition: the functionality to perform the required task. Since the main function of XAI tools is to integrate XAI methods into their users’ projects, we identify eight utility dimensions that provide the functional information necessary to implement XAI methods. Finally, we define “usability” as the ease with which a person can accomplish a given task with a given product. Since the main purpose of XAI tools is to extract explanations from AI models, we identify six usability dimensions related to enabling users to understand these explanations.

Category 1: Metadata

| Dimension | Definition |
| --- | --- |
| Tool Type | The deployment formats (including packages and platforms) the tool is offered in. This information enables tool users to assess the technical capabilities of a given tool against the needs of their project. |
| Tool Developers | The individuals or organizations who created the tool. Users may see some tools as more credible than others given differences in developer reputation. |
| Tool Users | The intended users of the tool, such as data scientists or business managers. Knowing this helps users assess the level of technical expertise needed to understand the explanations provided by the tool. |
| Tool Developed Year | The year the tool was released. This information may be useful to both users who associate new tools with state-of-the-art capabilities and users who wish to use more-established tools. |
| Type of Tool Access | Cost and support information about the tool, including whether it is open-source or proprietary. Knowing this may help users compare the monetary cost of tools and the availability of support services for them. |
| Tool License | Ownership information about the tool and specifications for its use. The legal conditions of using a tool may be especially important to know in commercial applications. |
| Tool Documentation | Details about the tool’s installation and configuration requirements. This enables users to assess what is needed to implement different tools. |
| Tool Compatibility | The tool’s capability to integrate with ML models built on various libraries, platforms, notebooks, and cloud-based environments. Knowing this will help users assess the appropriateness of the tools for their ML model. |

Category 2: Utility

| Dimension | Definition |
| --- | --- |
| Type of Dataset | The types of data the tool is built to handle (e.g., text, image, tabular, time series). This helps users assess whether a tool can handle a particular dataset. |
| Time of Explanation | The stages of the ML lifecycle at which the tool offers explanations. This helps users assess whether a tool offers data explanations as well as white-box and black-box explanations. |
| Scope of Explanation | Whether the explainability algorithms offered by the tool support explanations of individual predictions (i.e., local) or of the entire model’s behavior (i.e., global). This helps users assess the scope of explanation a tool offers. |
| Dependence on Model Class | Whether the algorithms used to provide explanations depend on the specific ML model (model-specific) or are independent of it (model-agnostic). |
| Type of ML Model | The types of ML models supported (e.g., deep learning, linear models). |
| Types of Explanation Algorithm | The specific XAI algorithms provided by the tool. |
| Type of ML task | The types of ML tasks supported, such as (semi-)supervised, unsupervised, and reinforcement learning. |
| Problem Type | The problem types supported within supervised and unsupervised learning (e.g., classification for supervised learning, clustering for unsupervised learning). |

Category 3: Usability

| Dimension | Definition |
| --- | --- |
| Explanation Type | The technical (e.g., summary statistics) and non-technical (e.g., plain English explanations) formats in which explanations are available to users. |
| Explainability Enhancing Features | Additional explainability metrics and attributes that make explanations easier for humans to interpret. |
| User Specific Explanations | The ability to customize explanations based on the profile of the ML stakeholder receiving them. |
| Explanation Documentation | The capability to automatically document the explanations provided. |
| Use case | Information about the tool’s scope in particular application domains. |
| Guidance for use | The support the tool provides for choosing among its explanation algorithms. |
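
To make the structure of the three tables concrete, here is a minimal sketch in Python, assuming each dimension of a toolsheet is recorded as a set of string values. It groups the 22 dimensions by category and shows how a practitioner might filter toolsheets on a few utility dimensions. The helper names (`matches`, `select_tools`) and both toolsheets are hypothetical, with invented values; this is one possible encoding, not a prescribed schema.

```python
from typing import Dict, List, Set

# The 22 dimensions from the three tables above, grouped by category.
TOOLSHEET_DIMENSIONS: Dict[str, List[str]] = {
    "Metadata": [
        "Tool Type", "Tool Developers", "Tool Users", "Tool Developed Year",
        "Type of Tool Access", "Tool License", "Tool Documentation",
        "Tool Compatibility",
    ],
    "Utility": [
        "Type of Dataset", "Time of Explanation", "Scope of Explanation",
        "Dependence on Model Class", "Type of ML Model",
        "Types of Explanation Algorithm", "Type of ML task", "Problem Type",
    ],
    "Usability": [
        "Explanation Type", "Explainability Enhancing Features",
        "User Specific Explanations", "Explanation Documentation",
        "Use case", "Guidance for use",
    ],
}

# Flat list of all dimension names, in table order.
ALL_DIMENSIONS = [d for dims in TOOLSHEET_DIMENSIONS.values() for d in dims]

# Assumption: a toolsheet maps dimension names to sets of values, since a tool
# may support several dataset types, explanation scopes, and so on.
Toolsheet = Dict[str, Set[str]]


def matches(sheet: Toolsheet, requirements: Dict[str, Set[str]]) -> bool:
    """Return True if, for every required dimension, the sheet lists all required values."""
    for dim, needed in requirements.items():
        if dim not in ALL_DIMENSIONS:
            raise KeyError(f"Unknown dimension: {dim}")
        # Set subset check: every needed value must appear in the sheet.
        if not needed <= sheet.get(dim, set()):
            return False
    return True


def select_tools(sheets: Dict[str, Toolsheet],
                 requirements: Dict[str, Set[str]]) -> List[str]:
    """Return the sorted names of tools whose sheets satisfy all requirements."""
    return sorted(name for name, sheet in sheets.items() if matches(sheet, requirements))


# Hypothetical toolsheets with invented values (not real tools).
sheets: Dict[str, Toolsheet] = {
    "hypothetical_tool_a": {
        "Type of Dataset": {"tabular"},
        "Scope of Explanation": {"local", "global"},
        "Dependence on Model Class": {"model-agnostic"},
    },
    "hypothetical_tool_b": {
        "Type of Dataset": {"image"},
        "Scope of Explanation": {"local"},
        "Dependence on Model Class": {"model-specific"},
    },
}

# A practitioner needing local explanations for a tabular dataset.
needs = {"Type of Dataset": {"tabular"}, "Scope of Explanation": {"local"}}
print(len(ALL_DIMENSIONS))
print(select_tools(sheets, needs))
```

Representing values as sets keeps the filter a simple subset test; a real toolsheet database would likely need controlled vocabularies for each dimension so that, for example, "tabular" is spelled the same way across tools.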

Next Steps

While the XAI Toolsheet is being developed with industry practitioners in mind, we believe it will be useful to many different audiences. This includes researchers, engineers, product managers, regulators, and anyone else who is interested in what properties to look for in an XAI tool. Furthermore, the XAI Toolsheet may enable tool developers to critically evaluate their tools for redundancy and help highlight the unique value proposition of their tools.

As a best practice, we believe the XAI tool community should start publishing single-page summaries that document the most relevant features of their tools. PAI’s XAI Toolsheet, which gives an overview of an XAI tool according to the 22 dimensions above, offers one format for doing so, and it can be further refined and expanded to increase its usefulness.
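
Such single-page summaries could be generated automatically from structured toolsheet data. The sketch below assumes a toolsheet is stored as a mapping from category to dimension to a set of values; the `render_summary` function, its plain-text layout, the truncation width, and the example values are all hypothetical choices for illustration. Dimensions with no recorded values are shown as "(not documented)" so that gaps stay visible.

```python
from typing import Dict, Set

# Assumed layout: category -> {dimension -> set of values}.
Sheet = Dict[str, Dict[str, Set[str]]]


def render_summary(tool_name: str, sheet: Sheet, width: int = 72) -> str:
    """Render a toolsheet as compact plain text, one section per category."""
    lines = [tool_name, "=" * len(tool_name)]
    for category, dims in sheet.items():
        lines.append("")
        lines.append(category.upper())
        for dim, values in dims.items():
            # Sort values for stable output; flag dimensions left empty.
            text = ", ".join(sorted(values)) if values else "(not documented)"
            row = f"  {dim}: {text}"
            # Truncate overly long rows so the summary stays compact.
            if len(row) > width:
                row = row[: width - 3] + "..."
            lines.append(row)
    return "\n".join(lines)


# Hypothetical example with invented values.
example: Sheet = {
    "Metadata": {"Type of Tool Access": {"open-source"}, "Tool License": set()},
    "Utility": {"Scope of Explanation": {"local", "global"}},
}
print(render_summary("hypothetical_tool_a", example))
```

Plain text keeps the sketch dependency-free; the same traversal could emit HTML or PDF for a visual one-page layout.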

For our future work, PAI plans to validate the proposed dimensions and format of the XAI Toolsheet with developers and users of XAI tools, determining how useful they find them in practice. We also plan to publish a database of XAI Toolsheets for the 152 XAI tools we reviewed in our research.

To learn more about our work with XAI tools as well as trends, gaps, and opportunities in the space, subscribe to our mailing list here. And if you are a tool user, tool developer, or XAI researcher who would like to contribute to this work as we look to refine the XAI Toolsheet, please sign up to attend our XAI Toolsheet workshop on July 12 here.

Additionally, we are working to broaden our scope to include fairness, privacy, and security tools that aid in developing and implementing responsible AI projects. As we continue to validate our XAI Toolsheet research, we are particularly eager to complement the Organisation for Economic Co-operation and Development’s (OECD) work on creating interactive databases of AI tools, practices, and approaches for implementing trustworthy AI. Since the tool dimensions identified in our work align with the OECD’s framework of trustworthy AI tools (which aims to help collect, structure, and share information on the latest tools), we hope to contribute and collaborate by sharing our database of tools with interested parties.

Acknowledgments

We would like to thank Sarah Newman for sharing the initial tools dataset and for her constructive suggestions during the planning and development of this research. We would also like to thank Muhammad Ali Chaudhry for valuable feedback that further strengthened our work.